-

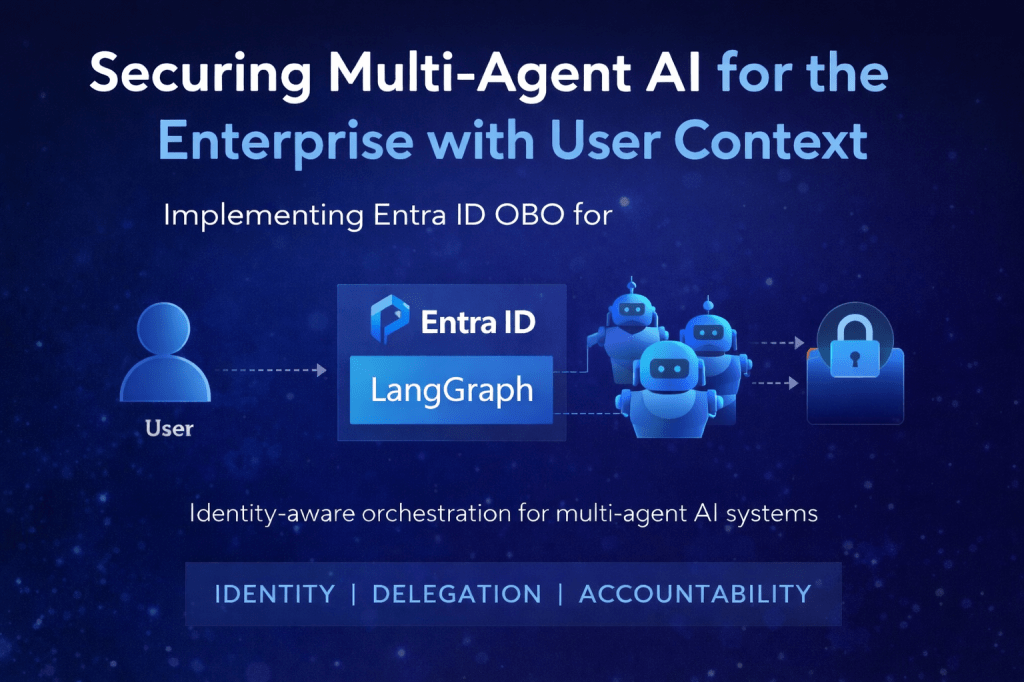

This is the first of two posts. This one focuses on the technical aspects; the next will address the CXO's perspective, so that together they provide a comprehensive, unified point of view. Introduction: When building AI-powered applications for the enterprise, a common challenge emerges: how do you maintain user identity and access controls when an AI agent queries backend…

-

The future of work is changing rapidly with the rise of AI agents in the enterprise. From user-friendly, no-code platforms like Copilot Studio that empower citizen developers to complex agentic AI pipelines orchestrating multiple agents on the backend, AI is transforming how business applications and processes are designed, deployed, and scaled across every industry vertical.…

-

Industry: Energy. Location: North America. Executive Summary: AI-Driven Multi-Agent Knowledge and IT Support Solution for an Energy Industry Firm. A North American energy company sought to modernize its legacy knowledge and IT support chatbot, which was underperforming across key metrics. The existing system, built on static rules and scripts, delivered slow and often inaccurate responses,…

-

Overview. Conventional data engineering pipelines and applications are increasingly being extended to include document extraction and preprocessing for unstructured PDF, audio, and video files. This shift is driven by growing business demand for generative AI applications built on the RAG (Retrieval-Augmented Generation) pattern. In this post, I will…

-

I just completed work on the digital transformation, design, development, and delivery of a cloud-native data solution for one of the biggest professional sports organizations in North America. In this post, I want to share some thoughts on the selected architecture and why we settled on it. This architecture was chosen to meet the…

-

Overview. Ingesting, storing, and processing millions of telemetry events from a plethora of remote IoT devices and sensors has become commonplace. One of the primary cloud services used to process streaming telemetry events at scale is Azure Event Hubs. Most documented implementations of Azure Databricks ingestion from Azure Event Hubs are based on…

-

Overview. At the time of writing, there doesn't seem to be built-in support for writing PySpark Structured Streaming query metrics from Azure Databricks to Azure Log Analytics. After some research, I found a workaround that captures the streaming query metrics as a Python dictionary object from within a notebook session and publishing…
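A minimal sketch of that idea follows, under loudly stated assumptions: it takes the dictionary returned by PySpark's `StreamingQuery.lastProgress`, keeps a handful of its top-level fields, and builds (but does not send) a request for the Azure Monitor HTTP Data Collector API, which Microsoft now treats as legacy in favor of the Logs Ingestion API. The helper names (`flatten_progress`, `build_signature`, `build_request`) and the `log_type` value are hypothetical, and the workaround in the post may publish the metrics differently.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from email.utils import format_datetime
from typing import Dict, Optional, Tuple

# Top-level fields of a StreamingQueryProgress dict kept as log columns (assumed choice).
PROGRESS_FIELDS = ("id", "runId", "name", "timestamp", "batchId",
                   "numInputRows", "inputRowsPerSecond", "processedRowsPerSecond")


def flatten_progress(progress: dict) -> dict:
    """Turn query.lastProgress into one flat record for a custom log table."""
    record = {k: progress.get(k) for k in PROGRESS_FIELDS}
    # durationMs is nested (addBatch, triggerExecution, ...); flatten it.
    for phase, ms in progress.get("durationMs", {}).items():
        record[f"durationMs_{phase}"] = ms
    return record


def build_signature(workspace_id: str, shared_key: str, rfc1123_date: str,
                    content_length: int) -> str:
    """SharedKey Authorization header value for the HTTP Data Collector API."""
    string_to_hash = (f"POST\n{content_length}\napplication/json\n"
                      f"x-ms-date:{rfc1123_date}\n/api/logs")
    digest = hmac.new(base64.b64decode(shared_key),
                      string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_request(workspace_id: str, shared_key: str, log_type: str,
                  progress: dict, now: Optional[datetime] = None
                  ) -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, body) ready to POST; nothing is sent here."""
    body = json.dumps([flatten_progress(progress)])
    # The API expects an RFC 1123 date in GMT, e.g. "Mon, 01 Jan 2024 00:00:00 GMT".
    rfc1123_date = format_datetime(now or datetime.now(timezone.utc), usegmt=True)
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, rfc1123_date,
                                         len(body.encode("utf-8"))),
        "Log-Type": log_type,  # Azure Monitor appends _CL to the custom table name
        "x-ms-date": rfc1123_date,
    }
    url = (f"https://{workspace_id}.ods.opinsights.azure.com"
           "/api/logs?api-version=2016-04-01")
    return url, headers, body
```

In a notebook you might call `build_request(workspace_id, shared_key, "StreamingMetrics", query.lastProgress)` after each trigger and send the result with `requests.post(url, data=body, headers=headers)`. Keeping the request construction separate from the network call makes the signing logic easy to check without a live workspace.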

-

In this post, I will walk through the steps I took to load data from Azure Databricks deployed with VNet injection (network isolation) into an Azure Synapse data warehouse instance deployed within a custom VNet and configured with a private endpoint and private DNS. Deploying these services, including Azure Data Lake Storage Gen2 within…

-

Use Case. In this post, I will share my experience evaluating an Azure Databricks feature that greatly simplified a batch-based data ingestion and processing ETL pipeline. Implementing an ETL pipeline that incrementally processes only new files as they land in a data lake in near real time (periodically, every few minutes or hours) can be complicated. Since…
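To make the problem concrete, here is a small plain-Python sketch of the bookkeeping such a pipeline needs: remember which files earlier runs already handled and process only the newcomers. It is not the Azure Databricks feature the post evaluates; `run_incremental_batch`, the JSON checkpoint file, and the flat landing folder are hypothetical stand-ins, and a real data lake listing would go through a storage API rather than `pathlib`.

```python
import json
from pathlib import Path
from typing import Callable, List


def run_incremental_batch(landing_dir: Path, checkpoint: Path,
                          process: Callable[[Path], None],
                          pattern: str = "*") -> List[str]:
    """Process only files not recorded in the checkpoint; return their names.

    Hypothetical helper: assumes a flat landing folder and a checkpoint file
    stored outside that folder.
    """
    # Names of files handled by earlier runs (empty on the very first run).
    seen = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()

    # Current contents of the landing folder, in a stable order.
    current = sorted(p for p in landing_dir.glob(pattern) if p.is_file())
    new_files = [p for p in current if p.name not in seen]

    for path in new_files:
        process(path)  # if this raises, the checkpoint below is not updated

    # Record progress only after every new file succeeded, so a failed run
    # is retried in full next time instead of silently skipping files.
    seen.update(p.name for p in new_files)
    checkpoint.write_text(json.dumps(sorted(seen)))
    return [p.name for p in new_files]
```

Committing the checkpoint only after the whole batch succeeds trades possible reprocessing after a failure for never losing a file. That state tracking, plus listing large folders efficiently on a schedule, is the kind of plumbing the post describes the Databricks feature as simplifying.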
